Spark context available as 'sc' (master = local, app id = local-1586423376133). To adjust logging level use sc.setLogLevel(newLevel). Using Spark's default log4j profile: org/apache/spark/log4j-defaults.properties using builtin-java classes where applicable spark-examples$ spark-shellĢ0/04/09 02:09:25 WARN Utils: Your hostname, ubuntu resolves to a loopback address: 127.0.1.1 using 192.168.192.128 instead (on interface ens33)Ģ0/04/09 02:09:25 WARN Utils: Set SPARK_LOCAL_IP if you need to bind to another addressĢ0/04/09 02:09:26 WARN NativeCodeLoader: Unable to load native-hadoop library for your platform. To show only errors set log4j.rootCategory=ERROR,console in the properties file.Ĭheck the setup by running Spark shell, which is the same as Scala REPL slightly modified. Spark-examples$ cd externals/spark/spark-2.4.5-bin-hadoop2.7/confĬonf$ cp log4j.properties Since Spark produces a lot of log information, you may choose to reduce the verbosity by changing the logging level. Spark configuration files are in the $SPARK_HOME/conf directory.

# SPARK-8333: Exception while deleting Spark temp dir It can be suppressed by modifying the log4j.properties file.

#Download spark 2.2.0 bin hadoop2.7 windows#

It’s a conflict of hadoop with Windows filesystem. SPARK-8333 causes an exception while deleting a Spark temp directory on Windows, so deploying it is not recommended. Set the environment variable HADOOP_HOME to the parent directory of bin/winutils.exe set HADOOP_HOME=%SPARK_HOME%\hadoop Since it is specifically used for Spark, I would put it under the Spark directory, for example, %SPARK_HOME%\hadoop\bin\winutils.exe.

#Download spark 2.2.0 bin hadoop2.7 download#

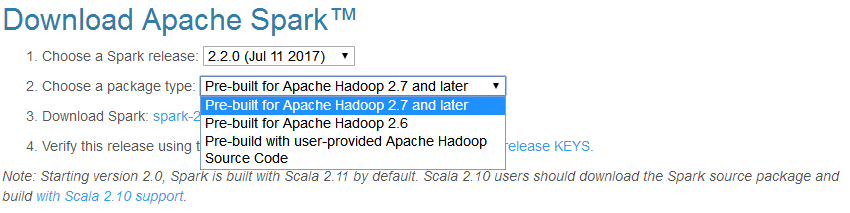

To fix it, download winutils.exe from Hortonworks or Github. Java.io.IOException: Could not locate executable null\bin\winutils.exe in the Hadoop binaries. ERROR Shell: Failed to locate the winutils binary in the hadoop binary path SPARK_HOME=d:\spark-examples\externals\spark\spark-2.4.5-bin-hadoop2.7ĭue to the bug SPARK-2356, Spark produces an error. SPARK_HOME=~/spark-examples/externals/spark/spark-2.4.5-bin-hadoop2.7Įxtract to d:\spark-examples\externals\spark. ~/spark-examples$ tar -xzf ~/Downloads/spark-2.4.5-bin-hadoop2.7.tgz -C externals/spark The notes here works as the next step after setting up a Scala environment detailed in Scala – Getting Started.Ĭhoose a Spark release: 2.4.5 (Feb 05 2020)Ĭhoose a package type : Pre-built for Apache Hadoop 2.7 Linux The challenge however, is in setting up a Scala development environment. Spark is written in Scala, so the Scala API has advantages. Spark supports APIs in Scala, Python, Java and R. Creating a Spark application in Scala in stand-alone mode on a localhost is pretty straight forward.
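The logging change above can also be scripted. Below is a minimal bash sketch for Linux. It rests on a few assumptions that are not from the notes above:

- The function name `quiet_spark_logs` and its `--windows-temp-dir-fix` flag are hypothetical.
- The `conf` directory is assumed to ship a `log4j.properties.template` containing a `log4j.rootCategory=...` line, as Spark 2.x distributions do.
- GNU `sed` is assumed.

The script copies the template (unless a `log4j.properties` already exists) and sets the root category to `ERROR,console`. With the flag, it also appends two logger settings, commonly suggested for hiding the SPARK-8333 "Exception while deleting Spark temp dir" messages. Treat those two lines as a suggestion to verify, not an official fix.

```shell
#!/usr/bin/env bash
# Sketch: reduce Spark's console logging by editing $SPARK_HOME/conf/log4j.properties.
# Assumptions (not from the original notes): conf/ contains log4j.properties.template
# with a "log4j.rootCategory=..." line, and sed is GNU sed (supports -i without a suffix).
set -euo pipefail

# Hypothetical helper: quiet_spark_logs <spark_home> [--windows-temp-dir-fix]
quiet_spark_logs() {
  local conf_dir="$1/conf"
  local props="$conf_dir/log4j.properties"

  # Start from the shipped template unless a log4j.properties already exists.
  if [[ ! -f "$props" ]]; then
    cp "$conf_dir/log4j.properties.template" "$props"
  fi

  # Show only errors on the console (the change described in the notes).
  sed -i 's/^log4j\.rootCategory=.*/log4j.rootCategory=ERROR,console/' "$props"

  # Optional, assumed workaround: silence the loggers that report the
  # SPARK-8333 "Exception while deleting Spark temp dir" message on Windows.
  if [[ "${2:-}" == "--windows-temp-dir-fix" ]]; then
    local line
    for line in \
      "log4j.logger.org.apache.spark.util.ShutdownHookManager=OFF" \
      "log4j.logger.org.apache.spark.SparkEnv=ERROR"; do
      # Append each setting only once, so the function is safe to re-run.
      grep -qxF "$line" "$props" || echo "$line" >> "$props"
    done
  fi
}

# Usage, with the path from the Linux section:
# quiet_spark_logs ~/spark-examples/externals/spark/spark-2.4.5-bin-hadoop2.7
```

The script edits the file in place rather than writing a new one, so any other settings in the template are kept. On macOS, BSD `sed` needs `sed -i ''` instead of `sed -i`.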
